Building a scalable backend with Kubernetes

Kubernetes has changed the way teams deploy and manage containerized applications. Because it provides a scalable, flexible platform for running backend services, it has become a common choice for many organizations. In this article, we explore how to build a scalable backend with Kubernetes, covering the key concepts, tools, and best practices for a robust and efficient application.

What is Kubernetes?

Kubernetes (also known as K8s) is an open-source container orchestration system for automating the deployment, scaling, and management of containerized applications. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes provides a platform-agnostic way to deploy, scale, and manage containers across multiple hosts.

Benefits of Using Kubernetes

So, why use Kubernetes to build a scalable backend? Here are just a few benefits:

- Scalability: Kubernetes makes it easy to scale your application horizontally (add more replicas) or vertically (increase resources for each replica).

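- High Availability: Kubernetes provides self-healing capabilities. If a container crashes, the kubelet restarts it, and if a node fails, controllers such as Deployments replace the pods that were running there with new pods on healthy nodes.

- Flexibility: Kubernetes works with any container runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Built-in support for Docker Engine (dockershim) was removed in Kubernetes 1.24, although images built with Docker still run unchanged, and the rkt project is no longer maintained.

- Efficient Resource Utilization: You can declare resource requests and limits for each container in a pod. The scheduler uses the requests to place pods on nodes with enough spare capacity, and the limits cap how much CPU and memory a container may consume.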
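As a rough illustration of how requests enable efficient packing, the sketch below models the basic fit check: a pod can be placed on a node only if the requests already scheduled there plus its own stay within the node's allocatable capacity. It is a simplification under stated assumptions: the function names are hypothetical, the quantity parser handles only the `m` CPU suffix and the `Mi`/`Gi` memory suffixes, and the real kube-scheduler applies many more filters and scoring rules.

```typescript
// Hypothetical helpers: a simplified model of request-based pod placement.
// Only a subset of Kubernetes quantity suffixes is supported.

interface Resources {
  cpuCores: number; // CPU in cores, e.g. "250m" -> 0.25
  memoryBytes: number; // memory in bytes
}

// Parse a CPU quantity such as "250m" (millicores) or "2" (whole cores).
function parseCpu(q: string): number {
  return q.slice(-1) === "m" ? Number(q.slice(0, -1)) / 1000 : Number(q);
}

// Parse a memory quantity such as "128Mi" or "1Gi" (binary suffixes only).
function parseMemory(q: string): number {
  if (q.slice(-2) === "Gi") return Number(q.slice(0, -2)) * 1024 ** 3;
  if (q.slice(-2) === "Mi") return Number(q.slice(0, -2)) * 1024 ** 2;
  return Number(q); // assume plain bytes
}

function requests(cpu: string, memory: string): Resources {
  return { cpuCores: parseCpu(cpu), memoryBytes: parseMemory(memory) };
}

// A pod fits if the requests already placed on the node plus the new pod's
// requests stay within the node's allocatable capacity.
function fits(allocatable: Resources, scheduled: Resources[], candidate: Resources): boolean {
  const used = scheduled.reduce<Resources>(
    (acc, r) => ({
      cpuCores: acc.cpuCores + r.cpuCores,
      memoryBytes: acc.memoryBytes + r.memoryBytes,
    }),
    { cpuCores: 0, memoryBytes: 0 },
  );
  return (
    used.cpuCores + candidate.cpuCores <= allocatable.cpuCores &&
    used.memoryBytes + candidate.memoryBytes <= allocatable.memoryBytes
  );
}

// Example: a node with 1 CPU and 1Gi allocatable; each pod requests 250m / 128Mi.
const node = requests("1", "1Gi");
const pod = requests("250m", "128Mi");
const placed = [pod, pod, pod];
console.log(fits(node, placed, pod)); // true: 4 x 250m adds up to exactly 1 CPU
console.log(fits(node, [...placed, pod], pod)); // false: a fifth pod would need 1.25 CPU
```

Limits are not part of this check: placement depends only on requests, which is why requests that are set too high waste capacity even when the containers are idle.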

Key Kubernetes Components

When designing a scalable backend with Kubernetes, there are several key components to consider:

- Pods: Pods are the smallest deployable units in Kubernetes. A pod wraps one or more containers that share a network address and storage volumes, and typically represents a single instance of a running application.

- ReplicaSets: ReplicaSets ensure that a specified number of replicas (identical pods) are running at any given time.

- Deployments: Deployments manage ReplicaSets and control the rollout of new versions of an application, including rolling updates and rollbacks.

- Services: Services give a group of pods a stable network identity (a virtual IP address and a DNS name) and load-balance traffic across them.

- Persistent Volumes: Persistent Volumes provide storage whose lifecycle is independent of any individual pod, so data is preserved when pods restart or are rescheduled.
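These objects are declarative: you state the desired result and a controller keeps working toward it. The hypothetical sketch below models that idea for a ReplicaSet, which finds its pods through a label selector (`matchLabels` requires every listed key and value to be present on the pod) and then computes how many pods to create or delete. The real controller acts through the API server and handles pod ownership, readiness, and deletion priority, none of which is modeled here.

```typescript
// Hypothetical, simplified model of a ReplicaSet controller's reconcile step.
// The real controller works through the API server and also handles pod
// ownership, readiness, and which pods to delete first.

type Labels = { [key: string]: string };

interface Pod {
  podName: string;
  labels: Labels;
}

// matchLabels semantics: every key/value pair in the selector must be on the pod.
function matchesSelector(labels: Labels, selector: Labels): boolean {
  for (const key in selector) {
    if (labels[key] !== selector[key]) return false;
  }
  return true;
}

interface Plan {
  toCreate: number;
  toDelete: string[];
}

// Compare the desired replica count with the matching pods that exist.
function reconcile(desired: number, selector: Labels, pods: Pod[]): Plan {
  const owned = pods.filter((p) => matchesSelector(p.labels, selector));
  if (owned.length < desired) {
    return { toCreate: desired - owned.length, toDelete: [] };
  }
  // Surplus pods: this sketch simply deletes the last ones in the list.
  return { toCreate: 0, toDelete: owned.slice(desired).map((p) => p.podName) };
}

const selector: Labels = { app: "backend" };
const observed: Pod[] = [
  { podName: "backend-a", labels: { app: "backend" } },
  { podName: "mongodb-0", labels: { app: "mongodb" } },
];
const plan = reconcile(3, selector, observed);
console.log(plan.toCreate, plan.toDelete.length); // 2 0
```

Because the controller repeats this comparison continuously, a pod lost to a node failure shows up as a shortfall on the next pass and is replaced, which is the self-healing behavior described earlier.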

Stateless and Stateful Applications

When designing a backend application, it's crucial to understand the difference between stateless and stateful applications. Stateless applications, such as a typical RESTful API, keep no session state in the process between requests; any data that must persist lives in an external store such as a database. Each request is processed independently, so any replica can serve any request, which makes the application easy to scale horizontally.
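The difference shows up as soon as a load balancer spreads requests across replicas. In this hypothetical simulation, two replicas each keep a per-user counter in memory while a simple round-robin router alternates between them, so neither replica sees the whole history; the stateless handler, by contrast, gives the same answer on any replica because everything it needs arrives with the request. The class and function names are illustrative only.

```typescript
// Hypothetical simulation: two replicas behind a round-robin router.

class StatefulReplica {
  // Per-user item counts kept in this process's memory only.
  private counts: { [userId: string]: number } = {};

  addItem(userId: string): number {
    this.counts[userId] = (this.counts[userId] || 0) + 1;
    return this.counts[userId];
  }
}

interface CartRequest {
  userId: string;
  items: string[]; // the state travels with the request (or lives in a shared store)
}

// Stateless: the answer depends only on the incoming request.
function statelessCartSize(req: CartRequest): number {
  return req.items.length;
}

const replicas = [new StatefulReplica(), new StatefulReplica()];
let nextReplica = 0;
function route(): StatefulReplica {
  const target = replicas[nextReplica % replicas.length];
  nextReplica++;
  return target;
}

// The same user adds three items; the router alternates between replicas.
const seen: number[] = [];
for (let i = 0; i < 3; i++) {
  seen.push(route().addItem("alice"));
}
console.log(seen.join(", ")); // 1, 1, 2: each replica only counts its own share

// The stateless handler returns 3 no matter which replica serves the request.
console.log(statelessCartSize({ userId: "alice", items: ["a", "b", "c"] })); // 3
```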

Stateful applications, on the other hand, maintain data or session state between requests. These applications require more careful planning and design to ensure scalability and high availability. Kubernetes helps with StatefulSets, which give each replica a stable name, network identity, and its own storage, and with Persistent Volumes, which keep that data beyond the life of any single pod.
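A StatefulSet's key guarantee is a stable identity per replica. The sketch below generates the names that make up that identity, following the naming rules in the Kubernetes StatefulSet documentation: pod names of the form `<statefulset>-<ordinal>`, per-pod DNS names under the headless Service that governs the set, and PersistentVolumeClaim names of the form `<claim-template>-<statefulset>-<ordinal>`. The names in the example (`mongodb`, `mongo`, `data`) are hypothetical, and `cluster.local` is only the default cluster domain.

```typescript
// Sketch of StatefulSet naming rules; the helper itself is hypothetical.

interface StatefulIdentity {
  podName: string; // <statefulset>-<ordinal>
  dnsName: string; // <pod>.<service>.<namespace>.svc.<cluster-domain>
  claimName: string; // <volumeClaimTemplate>-<statefulset>-<ordinal>
}

function statefulIdentities(
  setName: string,
  serviceName: string, // the headless Service that governs the set
  namespace: string,
  claimTemplate: string,
  replicas: number,
  clusterDomain = "cluster.local", // default; configurable per cluster
): StatefulIdentity[] {
  const out: StatefulIdentity[] = [];
  for (let i = 0; i < replicas; i++) {
    const podName = `${setName}-${i}`;
    out.push({
      podName,
      dnsName: `${podName}.${serviceName}.${namespace}.svc.${clusterDomain}`,
      claimName: `${claimTemplate}-${setName}-${i}`,
    });
  }
  return out;
}

for (const id of statefulIdentities("mongodb", "mongo", "default", "data", 3)) {
  console.log(id.podName, id.dnsName, id.claimName);
}
// First line: mongodb-0 mongodb-0.mongo.default.svc.cluster.local data-mongodb-0
```

Because a rescheduled pod comes back with the same name, it reattaches to the same claim (for example `data-mongodb-0`), so each database member keeps its own data.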

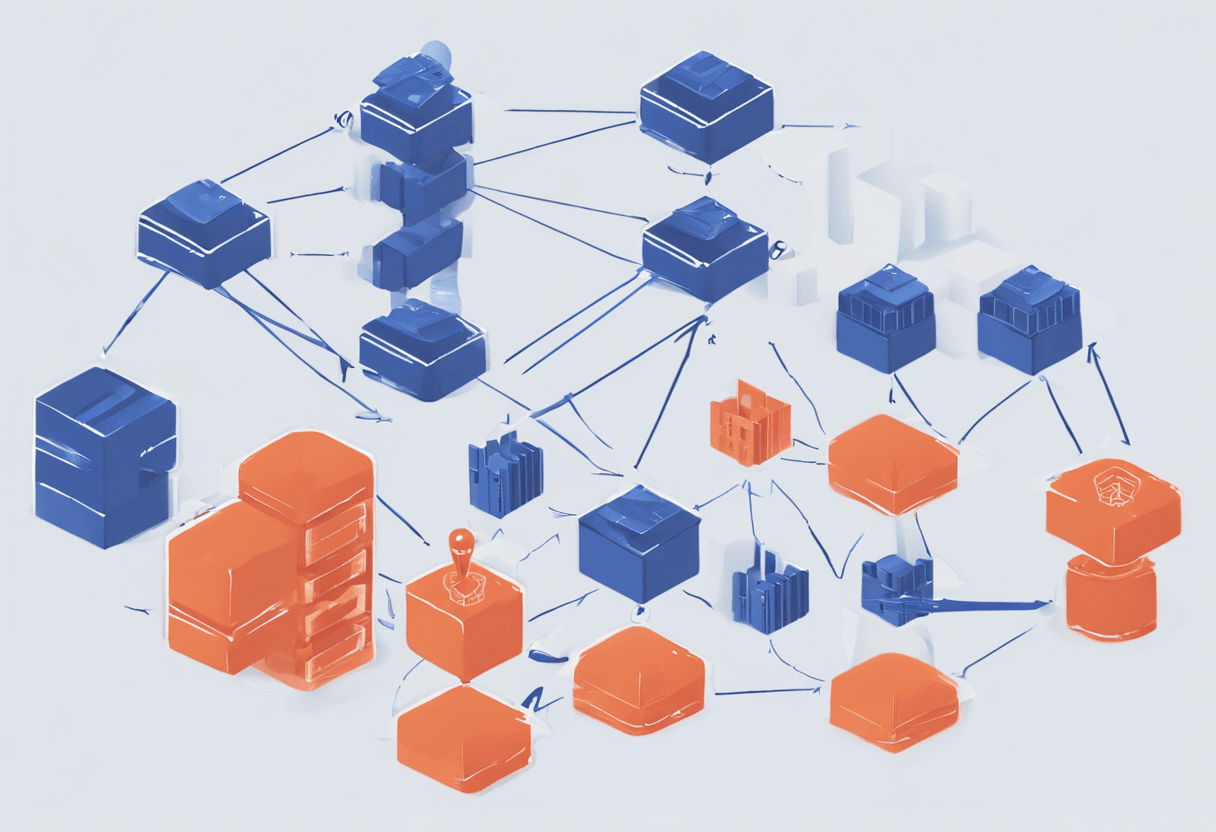

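Kubernetes Architecture

Before we dive into building a scalable backend, it's essential to understand how a Kubernetes cluster is organized. A cluster consists of:

- Control plane: The API server (kube-apiserver), which every component and client talks to; etcd, the key-value store that holds the cluster's state; the scheduler (kube-scheduler), which assigns pods to nodes; and the controller manager (kube-controller-manager), which runs controllers such as the ReplicaSet and Deployment controllers.

- Worker nodes: The machines that run your pods. Each node runs the kubelet, which starts and monitors containers through the container runtime, and usually kube-proxy, which routes Service traffic to the right pods.

The objects described earlier (Pods, ReplicaSets, Deployments, Services, and Persistent Volumes) are records stored through the API server, and the control plane continuously works to make the cluster's actual state match them.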

Designing a Scalable Backend

To design a scalable backend, we need to consider several factors, including:

- Microservices Architecture: Break down the application into smaller, independent microservices that communicate with each other using RESTful APIs or message queues.

- Service Discovery: Use Kubernetes' built-in service discovery. The cluster DNS service (usually CoreDNS) gives every Service a name such as backend.default.svc.cluster.local, so services can find each other by name instead of by IP address.

- Load Balancing: Use Kubernetes Services or third-party load balancers to distribute incoming traffic across multiple replicas.

- Horizontal Pod Autoscaling: Configure a HorizontalPodAutoscaler (HPA) to adjust the number of replicas based on CPU utilization or other metrics.
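For service discovery in particular, Kubernetes follows fixed naming conventions, sketched below as two small hypothetical helpers: the cluster DNS name of a Service, and the environment variable names injected into pods that start after the Service exists (the Service name is upper-cased and dashes become underscores). Within the same namespace, a pod can also reach a Service by its short name, for example `backend`. The `order-api` Service in the example is hypothetical.

```typescript
// Hypothetical helpers encoding two Kubernetes service discovery conventions.

// Cluster DNS name of a Service: <service>.<namespace>.svc.<cluster-domain>.
// "cluster.local" is the default cluster domain; clusters can configure another.
function serviceDnsName(service: string, namespace: string, clusterDomain = "cluster.local"): string {
  return `${service}.${namespace}.svc.${clusterDomain}`;
}

// Environment variables injected into pods created after the Service exists:
// {SVCNAME}_SERVICE_HOST and {SVCNAME}_SERVICE_PORT, with the Service name
// upper-cased and dashes converted to underscores.
function serviceEnvVarNames(service: string): { host: string; port: string } {
  const prefix = service.toUpperCase().replace(/-/g, "_");
  return { host: `${prefix}_SERVICE_HOST`, port: `${prefix}_SERVICE_PORT` };
}

console.log(serviceDnsName("backend", "default")); // backend.default.svc.cluster.local
console.log(serviceEnvVarNames("order-api").host); // ORDER_API_SERVICE_HOST
```

DNS is usually the better choice of the two, since the environment variables are only set for Services that already existed when the pod started.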

Example: Deploying a Node.js REST API

Let's walk through a simple example: a RESTful API written in Node.js, with MongoDB as its database. The steps below cover the stateless API tier; MongoDB, being stateful, would typically run as a StatefulSet backed by Persistent Volumes or as a managed database service.

Step 1: Create a Docker Image

First, we need to create a Docker image for our Node.js application. We will use a Dockerfile to define the build process:

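```dockerfile
# Node.js 14 is end-of-life; use a maintained LTS release
FROM node:22-slim

WORKDIR /app

# Copy the manifests first so the dependency layer is cached between builds
COPY package*.json ./
# npm ci installs exactly what package-lock.json specifies
RUN npm ci

COPY . .

# Runs the "build" script only if package.json defines one
RUN npm run build --if-present

# Remove devDependencies that were only needed for the build
RUN npm prune --omit=dev

# Run as the unprivileged "node" user provided by the official image
USER node

EXPOSE 3000

# Adjust the entry point if the build writes its output elsewhere (e.g. dist/)
CMD ["node", "index.js"]
```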

Step 2: Create a Kubernetes Deployment

Next, we will create a Kubernetes Deployment to manage the rollout of our application:

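```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: backend
          image: <docker-image-name>
          ports:
            - containerPort: 3000
          # CPU requests are required for CPU-based autoscaling (Step 4).
          # These values are illustrative; tune them for your workload.
          resources:
            requests:
              cpu: 250m
              memory: 128Mi
            limits:
              cpu: 500m
              memory: 256Mi
```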
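Because the manifest does not set a `strategy`, the Deployment uses the default RollingUpdate strategy, where `maxSurge` and `maxUnavailable` both default to 25%. According to the Deployment documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down; the hypothetical helper below applies those rules to show the pod counts a rollout stays within.

```typescript
// Hypothetical helper: pod-count bounds during a RollingUpdate, assuming
// percentage values for maxSurge and maxUnavailable (both default to 25%).
function rolloutBounds(replicas: number, maxSurgePct = 25, maxUnavailablePct = 25) {
  // Percentages become pod counts: surge rounds up, unavailable rounds down.
  const maxSurge = Math.ceil((replicas * maxSurgePct) / 100);
  const maxUnavailable = Math.floor((replicas * maxUnavailablePct) / 100);
  return {
    maxPods: replicas + maxSurge, // at most this many pods in total
    minAvailable: replicas - maxUnavailable, // at least this many available
  };
}

console.log(rolloutBounds(3)); // { maxPods: 4, minAvailable: 3 }
console.log(rolloutBounds(10)); // { maxPods: 13, minAvailable: 8 }
```

With three replicas, the rollout therefore starts one new pod at a time and waits for it to become available before removing an old one, so capacity never drops below three pods.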

Step 3: Create a Kubernetes Service

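We will create a Kubernetes Service to give our application a stable network identity and to load-balance traffic across its pods. With type: LoadBalancer, the cloud provider provisions an external load balancer; the default ClusterIP type is enough if the API only needs to be reachable inside the cluster.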

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend
spec:
  selector:
    app: backend
  ports:
    - name: http
      port: 80
      targetPort: 3000
  type: LoadBalancer
```
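Conceptually, the Service above selects the pods labeled `app: backend`, pairs each ready pod's IP with `targetPort` 3000, and spreads traffic arriving on port 80 across those endpoints. The sketch below models that under simplifying assumptions: the pod IPs are made up, readiness is a plain boolean rather than EndpointSlice conditions, and the round-robin picker is only illustrative, since kube-proxy in its default iptables mode chooses a backend at random.

```typescript
// Hypothetical, simplified model of Service endpoint selection.

interface PodInfo {
  ip: string;
  labels: { [key: string]: string };
  ready: boolean;
}

// Build "ip:targetPort" endpoints for ready pods that match every selector label.
function endpointsFor(selector: { [key: string]: string }, targetPort: number, pods: PodInfo[]): string[] {
  const result: string[] = [];
  for (const p of pods) {
    let matches = true;
    for (const key in selector) {
      if (p.labels[key] !== selector[key]) matches = false;
    }
    // Pods that are not ready receive no traffic.
    if (matches && p.ready) result.push(`${p.ip}:${targetPort}`);
  }
  return result;
}

// Illustrative round-robin picker over a fixed endpoint list.
function roundRobin(endpoints: string[]): () => string {
  let i = 0;
  return () => endpoints[i++ % endpoints.length];
}

const pods: PodInfo[] = [
  { ip: "10.0.0.11", labels: { app: "backend" }, ready: true },
  { ip: "10.0.0.12", labels: { app: "backend" }, ready: false },
  { ip: "10.0.0.13", labels: { app: "backend" }, ready: true },
  { ip: "10.0.0.20", labels: { app: "mongodb" }, ready: true },
];

const eps = endpointsFor({ app: "backend" }, 3000, pods);
const pick = roundRobin(eps);
console.log(eps.join(", ")); // 10.0.0.11:3000, 10.0.0.13:3000
console.log(pick(), pick(), pick()); // 10.0.0.11:3000 10.0.0.13:3000 10.0.0.11:3000
```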

Step 4: Configure Horizontal Pod Autoscaling

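Finally, we will configure a HorizontalPodAutoscaler (HPA) to scale the number of replicas based on CPU utilization. Utilization is measured as a percentage of each pod's CPU request, so the containers must declare CPU requests, and the cluster needs a metrics source such as metrics-server: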

```yaml
# autoscaling/v2beta2 was removed in Kubernetes 1.26; use the stable v2 API
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: backend
spec:
  # The HPA finds its pods through scaleTargetRef; it has no selector field
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: backend
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50
```
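To see what `averageUtilization: 50` means in practice, the sketch below implements the core rule from the HorizontalPodAutoscaler documentation, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, together with the default 0.1 tolerance and clamping to `minReplicas` and `maxReplicas`. It deliberately leaves out parts of the real controller, such as the handling of unready or missing pods and the scale-down stabilization window, so treat it as an approximation; the function name is hypothetical.

```typescript
// Hypothetical sketch of the HPA's core scaling rule:
//   desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)
// No change is made when the ratio is within the tolerance, and the result is
// clamped to [minReplicas, maxReplicas].
function desiredReplicas(
  currentReplicas: number,
  currentUtilization: number, // average CPU usage as a % of requests
  targetUtilization: number, // averageUtilization from the HPA spec
  minReplicas: number,
  maxReplicas: number,
  tolerance = 0.1, // default tolerance
): number {
  const ratio = currentUtilization / targetUtilization;
  const proposed =
    Math.abs(ratio - 1) <= tolerance ? currentReplicas : Math.ceil(currentReplicas * ratio);
  return Math.min(maxReplicas, Math.max(minReplicas, proposed));
}

// With the HPA above (target 50%, min 3, max 10):
console.log(desiredReplicas(3, 90, 50, 3, 10)); // 6: ceil(3 * 1.8) = ceil(5.4)
console.log(desiredReplicas(3, 52, 50, 3, 10)); // 3: ratio 1.04 is within tolerance
console.log(desiredReplicas(8, 100, 50, 3, 10)); // 10: 16 is capped at maxReplicas
console.log(desiredReplicas(6, 10, 50, 3, 10)); // 3: ceil(1.2) = 2, raised to minReplicas
```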

Conclusion

Building a scalable backend with Kubernetes requires careful planning and design. By understanding the key concepts, tools, and best practices, we can create a robust and efficient application that scales with the demands of our users. In this article, we explored the main design considerations and walked through the core steps: building a container image, then defining a Deployment, a Service, and a HorizontalPodAutoscaler. Together, these give the application a solid foundation for scalability, high availability, and efficiency.

Additional Considerations

When building a scalable backend with Kubernetes, there are several additional considerations to keep in mind:

- Monitoring and Logging: Use tools such as Prometheus for metrics and Fluentd for log collection to gain visibility into application performance and issues.

- Security: Use NetworkPolicies to restrict traffic between pods, and Kubernetes Secrets or an external secret manager to handle credentials, protecting the application and its data.

- CI/CD Pipelines: Implement CI/CD pipelines to automate the build, test, and deployment of the application.

By considering these additional factors, we can ensure that our scalable backend is not only efficient and scalable but also secure, reliable, and maintainable.

Final Thoughts

Kubernetes supplies the building blocks, but a scalable backend still depends on careful design: keep services stateless where possible, give stateful components stable storage, declare resource requests, and let autoscaling respond to real load. Pair the steps in this article with monitoring and logging, security controls, and CI/CD pipelines to build a comprehensive and reliable solution.